ChatGPT and health information: A warning for lawmakers?

Date: 18 June 2023

By Michael Woldeyes LLM, International Human Rights Law, mengistumichael@gmail.com

The New York Times recently published an interesting article on the use of ChatGPT. It reported that a New York-based attorney had submitted to a court a 10-page brief that cited and summarized more than half a dozen court decisions sourced from ChatGPT. To the attorney’s horror, “no one …, not even the judge himself — could find the decisions or the quotations cited and summarized in the brief”. Apparently, most of the cases the lawyer cited had been fabricated by the chatbot. The incident forced the lawyer, who has practiced law in the US for three decades, to throw himself on the mercy of the court, because his reliance on the application had led him to submit false information.

Stories about ChatGPT making up false information are not new. However, this particular story, I believe, is quite alarming. The career of a highly experienced professional is now in question because he failed to verify the information he sourced from ChatGPT, as “he had never used ChatGPT, and, therefore, was unaware of the possibility that its content could be false.”

This case raises a number of questions about accessing health information through ChatGPT and other similar artificial intelligence (AI) programs. What does it imply for the use of AI programs to access health information? What happens when individuals use applications such as ChatGPT to self-diagnose? Should lawmakers regulate the use of AI programs to make sure that the “right” health information is delivered to individuals? This article tries to answer the last question by examining the shortcomings of ChatGPT.

What is ChatGPT?

ChatGPT is an AI-driven chatbot that can answer questions and assist people with various tasks in a conversational way. It was launched in November 2022 by OpenAI, a California-based AI research and development company, and became the fastest-growing consumer application in history after reaching 100 million active users in January 2023. The chatbot is built on OpenAI’s GPT-3.5 series of models, successors to the Generative Pre-trained Transformer 3 (GPT-3) language model, which is “one of the largest and most powerful language processing AI models to date”. According to OpenAI, the dialogue format the chatbot uses allows it “to answer follow up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests”. Although ChatGPT is just one of several AI technologies created by OpenAI, it owes its widespread recognition to its ability to produce high-quality, human-like replies to text prompts.[1]

Advantages and shortcomings for the health system

In general, the features of ChatGPT could help make health systems around the world more efficient by improving diagnostics, detecting medical errors, and reducing the burden of paperwork.[2] The program can also help educate medical students and improve the skills of practitioners such as physicians and nurses. In particular, it can be valuable in disseminating information on disease prevention, treatment, and health promotion to people of all backgrounds in both high-income and low-income countries. For example, the chatbot can provide information on healthy lifestyle choices, the importance of vaccination, the advantages of regular screening tests, and strategies for reducing risk factors for communicable and non-communicable diseases.[3]

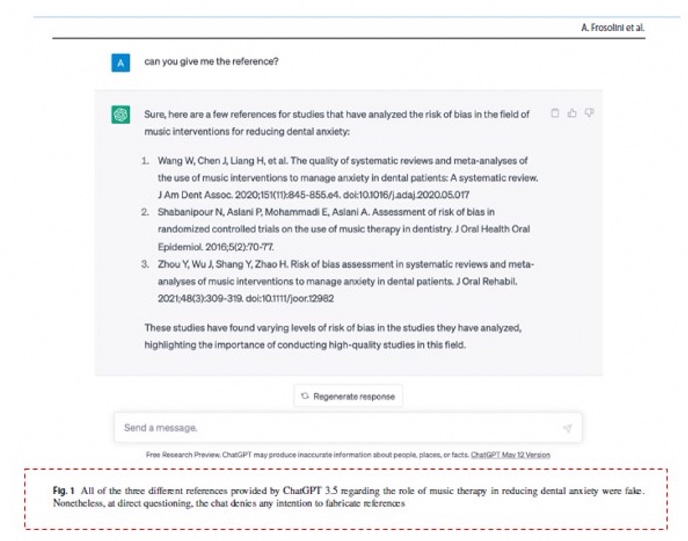

Even though ChatGPT’s potential benefits for the health system are undeniable, the program also has downsides that cannot be ignored and that are alarming where health is concerned. As the New York Times story discussed above illustrates, and as several other instances confirm, the chatbot tends to provide false information that may mislead its users.[4] In this regard, a study that examined 40,000 questions and their corresponding answers from human experts and ChatGPT, covering a wide range of domains including medicine, law, and psychology, found that ChatGPT may fabricate facts in order to give an answer. The study concluded that this tendency arises when the chatbot tries to answer a question that requires professional knowledge in a particular field.[5] It also notes that the AI program may fabricate facts when a user asks a question that has no existing answer.

Such fabrications have surfaced repeatedly. For instance, ChatGPT was found fabricating articles attributed to The Guardian that were never written and presenting them to researchers. As revealed by The Washington Post, it is also capable of citing fake articles as evidence. This means that users of the chatbot run a real risk of receiving false information about health and healthy practices. A recent publication in Annals of Biomedical Engineering by A. Frosolini et al. illustrates this:[6] as the screenshot shows, the researchers caught the chatbot making up information about dental anxiety.

A warning for lawmakers?

Although AI has come a long way, ChatGPT is still in its infancy. However helpful it has become in various matters, its inaccuracies and fabricated stories pose a great risk for users who want to access health information. By relying on made-up data and information, users of the AI program risk falling into unhealthy habits and practices.

It may be argued that ChatGPT will improve as it develops. However, whether it will stop fabricating stories and data as it advances remains uncertain. In addition, other companies such as Google and Microsoft are developing chatbots similar to ChatGPT. As a result, even if ChatGPT reaches a stage where it no longer fabricates data, less mature AI tools will remain widely available to the public, especially to those who cannot or do not want to pay for more advanced services. Therefore, the risk of obtaining false health information from AI programs is likely to persist for a long time. This, I believe, calls for lawmakers, nationally and internationally, to pay attention to the risks of using ChatGPT and similar AI programs to access health information, since these problems could affect the health of a large number of people all over the world. Lawmakers should take heed of these facts and devise solutions to address the problems with the chatbot and similar platforms.

[1] Mohd Javaid, Abid Haleem, Ravi Pratap Singh, “ChatGPT for healthcare services: An emerging stage for an innovative perspective” [2023] 3 BenchCouncil Transactions on Benchmarks, Standards and Evaluations 5.

[2] Jan Homolak, “Opportunities and risks of ChatGPT in medicine, science, and academic publishing: a modern Promethean dilemma” [2023] 64 Croatian Medical Journal 1.

[3] Som S. Biswas, “Role of ChatGPT in Public Health” [2023] 51 Annals of Biomedical Engineering 868.

[4] Naveen Manohar, Shruthi S. Prasad, “Use of ChatGPT in Academic Publishing: A Rare Case of Seronegative Systemic Lupus Erythematosus in a Patient with HIV Infection” [2023] 15 (2) Cureus 7. See also Andrea Frosolini, Paolo Gennaro, Flavia Cascino, Guido Gabriele, “In Reference to ‘Role of ChatGPT in Public Health’, to highlight the AI’s Incorrect Reference Generation” [2023] Annals of Biomedical Engineering [unnumbered]. Available at https://link.springer.com/article/10.1007/s10439-023-03248-4.

[5] Biyang Guo, Xin Zhang, Ziyuan Wang, Minqi Jiang, Jinran Nie, Yuxuan Ding, Jianwei Yue, Yupeng Wu, “How Close is ChatGPT to Human Experts? Comparison Corpus, Evaluation, and Detection” [2023] arXiv:2301.07597v1 6.

[6] Andrea Frosolini, Paolo Gennaro, Flavia Cascino, Guido Gabriele (n 4).