Responsible AI should be capable of logical reasoning

New EU regulations will limit the use of AI and work towards more responsible AI systems. Bart Verheij, Professor of AI & Argumentation at the University of Groningen, thinks that responsible AI should be capable of logical reasoning. That way, an AI system will be able to explain itself and be corrected where necessary.

FSE Science News Room | Charlotte Vlek

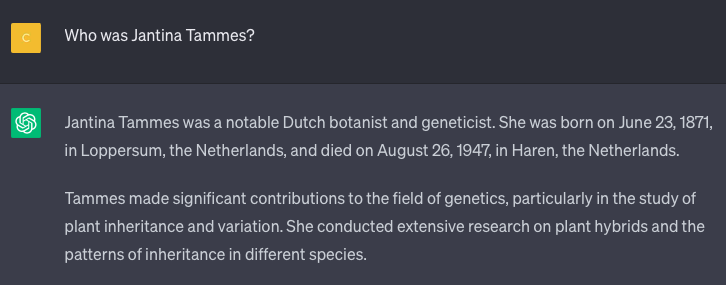

Hallucination is a nasty problem in AI systems: they may give a very confident answer that is grammatically correct and looks good, but is simply made up. For instance, ChatGPT’s response to the question ‘Who was Jantina Tammes?’ looks good at first glance, but states that she was born in Loppersum (incorrect) and died in Haren (also incorrect). In the US, a lawyer used ChatGPT to write a brief in a lawsuit against an airline, but the brief turned out to be full of made-up sources. The judge was not amused.


Machine Learning

ChatGPT uses Machine Learning, a popular method in AI in which a computer is trained on enormous amounts of data. Based on these data, the computer learns to perform a concrete task. In essence, this is all statistics: the computer receives a huge number of examples and can thus produce the most likely response to new input.

In the case of ChatGPT, the goal of the training was to produce the most likely next word in a conversation. Seen in that light, it is not surprising that ChatGPT sometimes hallucinates: ChatGPT doesn’t ‘know’ anything about Jantina Tammes, but only produces what is statistically most likely given the datasets it was fed, and apparently Loppersum and Haren scored high in this case.
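To make the idea of "the most likely next word" concrete, here is a deliberately tiny sketch. It is an assumption-laden toy, not how ChatGPT works internally: ChatGPT uses a large neural network, whereas this example simply counts which word follows which (a bigram model) in a small invented corpus. The corpus, the function names `most_likely_next` and `continue_text`, and the sentences in it are all hypothetical. The point is that the model completes a sentence about Tammes without ever having seen her name, purely from word frequencies.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for illustration (not real training data).
corpus = (
    "she was born in loppersum . "
    "he was born in loppersum . "
    "she was born in groningen . "
    "he died in haren . "
).split()

# For every word, count which words follow it and how often (a bigram model).
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1

def most_likely_next(word: str) -> str:
    """Return the word that most often followed `word` in the corpus."""
    counts = following.get(word)
    if not counts:
        return "."  # unseen word: end the sentence (an arbitrary choice in this toy)
    return counts.most_common(1)[0][0]

def continue_text(prompt: list[str], n: int) -> list[str]:
    """Extend the prompt by n words, each time picking the most frequent follower."""
    words = list(prompt)
    for _ in range(n):
        words.append(most_likely_next(words[-1]))
    return words

print(" ".join(continue_text(["tammes", "was", "born", "in"], 1)))
# prints: tammes was born in loppersum
```

The model has no facts about Tammes at all; "loppersum" wins only because it followed "in" most often in the data, which is the mechanism behind the hallucination described above.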

Verheij: ‘But sometimes, such a language model turns out to be fairly good at tasks for which it was not trained, such as adding and subtracting. And sometimes ChatGPT produces a very exact argument, whereas in other instances it is not capable of logical reasoning at all. No one understands exactly why and when this happens, and that makes such a system unreliable.’

Knowledge and data

Verheij distinguishes two main trends within AI: knowledge systems and data systems. A knowledge system operates on the basis of logic: you put knowledge and rules in, what it returns always follows correctly from them, and, if desired, it can explain how it got there. These kinds of systems are built by people, from the ground up. Data systems work with enormous datasets and extract patterns from them on their own, for instance by means of Machine Learning.
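The following minimal sketch shows what "put knowledge and rules in, and get an explainable answer out" can look like in a knowledge system. It assumes a simple forward-chaining rule engine; the rule names (`r1`, `r2`) and facts such as `caused_damage` are hypothetical and are not a rendering of actual Dutch law. Besides the answer, the engine records which rule produced each conclusion, so it can print a step-by-step justification.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    name: str
    conditions: tuple[str, ...]
    conclusion: str

# Hypothetical hand-written rules (the "built by people" part of a knowledge system).
RULES = [
    Rule("r1", ("caused_damage", "act_was_unlawful"), "wrongful_act"),
    Rule("r2", ("wrongful_act", "attributable_to_actor"), "liable"),
]

def infer(facts: set[str], rules: list[Rule]) -> dict[str, Rule]:
    """Forward chaining: apply rules until nothing new can be derived.
    Returns, for each derived conclusion, the rule that produced it."""
    known = set(facts)
    derived_by: dict[str, Rule] = {}
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.conclusion not in known and all(c in known for c in rule.conditions):
                known.add(rule.conclusion)
                derived_by[rule.conclusion] = rule
                changed = True
    return derived_by

def explain(goal: str, facts: set[str], derived_by: dict[str, Rule], indent: int = 0) -> list[str]:
    """Build a human-readable justification tree for `goal`."""
    pad = "  " * indent
    if goal in facts:
        return [f"{pad}{goal}: given as a fact"]
    if goal not in derived_by:
        return [f"{pad}{goal}: cannot be derived"]
    rule = derived_by[goal]
    lines = [f"{pad}{goal}: by rule {rule.name}, because"]
    for cond in rule.conditions:
        lines += explain(cond, facts, derived_by, indent + 1)
    return lines

facts = {"caused_damage", "act_was_unlawful", "attributable_to_actor"}
derived = infer(facts, RULES)
print("\n".join(explain("liable", facts, derived)))
```

If a fact is missing, the same `explain` call reports that the goal cannot be derived, which is the kind of inspectable, correctable behaviour the article contrasts with data systems.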

Modern AI in particular is not good at explaining itself

Under the supervision of Bart Verheij, PhD student Cor Steging investigated how Machine Learning deals with rules and logic. Steging took a rule from Dutch law that specifies what counts as a wrongful act. From this rule, he generated a ‘perfect dataset’ of examples and studied what a computer distills from that dataset.

After training on this ‘perfect dataset’, the computer programme was able to indicate with high accuracy whether or not something was wrongful. So far, so good. But the programme did not learn the correct underlying rules from the dataset. Verheij: ‘In particular, it failed to learn the exact combination of logical conditions that is needed in law. And threshold values, such as age limits, are not recognized correctly.’
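How can a programme score highly yet still miss an age limit? The sketch below is a hypothetical toy, not a reconstruction of Steging's experiment: the rule (liable only if damage was caused and the person is at least `AGE_LIMIT` = 14), the training ages, and the midpoint threshold learner are all assumptions chosen for illustration. The learner places its threshold halfway between the oldest non-liable and youngest liable example, similar in spirit to how a decision tree picks a split point. The labels are exactly correct, but in this sketch no ages near the limit happen to occur in the training data.

```python
# Hypothetical simplified rule (an assumption, not the actual statute):
# a person is liable only if they caused damage AND are at least AGE_LIMIT years old.
AGE_LIMIT = 14

def true_rule(age: int, caused_damage: bool) -> bool:
    return caused_damage and age >= AGE_LIMIT

# Correctly labelled training data; by assumption, no ages between 10 and 20 appear.
train_ages = [5, 8, 10, 20, 30, 45, 60]
train = [(a, d, true_rule(a, d)) for a in train_ages for d in (False, True)]

def learn_threshold(data) -> float:
    """Toy learner: put the age threshold midway between the oldest
    non-liable and the youngest liable person among those who caused damage."""
    neg = [a for a, d, y in data if d and not y]
    pos = [a for a, d, y in data if d and y]
    return (max(neg) + min(pos)) / 2

learned = learn_threshold(train)

def learned_model(age: int, caused_damage: bool) -> bool:
    return caused_damage and age >= learned

train_acc = sum(learned_model(a, d) == y for a, d, y in train) / len(train)
print(learned, train_acc)  # 15.0 1.0
print(learned_model(14, True), true_rule(14, True))  # False True
```

The learned model is perfect on its training data, yet its limit is 15 instead of 14, so it gets every 14-year-old wrong. Nothing in its accuracy score reveals this; only inspecting the rule it learned does.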

‘Modern AI in particular, which is so powerful, is not good at explaining itself. It’s a black box.’ And that needs to change, according to Verheij. That is why researchers in Groningen are working on computational argumentation. ‘It would be great if humans and machines could support each other in a critical conversation, because only humans understand the human world, and only machines are capable of processing so much information so quickly.’
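Computational argumentation covers many formalisms, and the article does not say which ones the Groningen researchers use. As one widely known example, the sketch below computes the grounded extension of a Dung-style abstract argumentation framework: arguments attack each other, and an argument is accepted once every attacker has itself been defeated by an accepted argument. The arguments A, B and C form a hypothetical machine-human exchange invented for illustration.

```python
def grounded_extension(arguments: set[str], attacks: set[tuple[str, str]]) -> set[str]:
    """Grounded semantics of an abstract argumentation framework.
    Starting from the empty set, repeatedly accept every argument whose
    attackers are all attacked by already-accepted arguments, until a fixed point."""
    accepted: set[str] = set()
    while True:
        # Arguments defeated by something we currently accept.
        defeated = {b for (a, b) in attacks if a in accepted}
        newly = {
            x for x in arguments
            if all(a in defeated for (a, b) in attacks if b == x)
        }
        if newly == accepted:
            return accepted
        accepted = newly

# Hypothetical critical conversation:
#   A (machine): "The act was wrongful."
#   B (human):   "The actor was below the age limit."           attacks A
#   C (machine): "Records show the actor was above the limit."  attacks B
args = {"A", "B", "C"}
atts = {("B", "A"), ("C", "B")}
print(sorted(grounded_extension(args, atts)))  # ['A', 'C']
```

Because every conclusion is tied to explicit arguments and attacks, a human can challenge a specific step (for example by adding a counterargument to C) and see exactly how the outcome changes.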

Last modified: 27 June 2024, 3.45 p.m.
