Recurrent Competitive Networks

This work is carried out in collaboration with the Institute of Neuroinformatics (University of Zurich and ETH Zurich, CH).

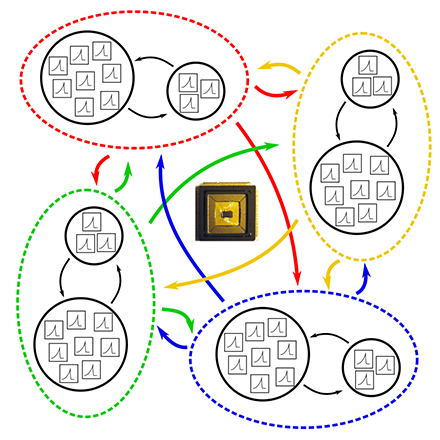

In this project, we study the implementation of Recurrent Competitive Networks (RCNs) [1] and their constituent parts in analog neuromorphic hardware. Anatomical studies of the brain show that many cortical circuits share a common, repeated connectivity motif: inhibitory and excitatory populations of neurons connect to each other recurrently, randomly, and in relatively consistent proportions. These connections are subject to Hebbian learning and homeostatic control. An RCN is a particular implementation of such a network that has been shown to have interesting theoretical properties. Small RCNs, on the order of hundreds of neurons, can be used to represent variables. Their dynamics allow them to learn the topology of their input and to perform signal processing tasks such as cue integration and decision making [2]. Large RCNs can represent multiple variables and learn the relationships between them [1], [3]. These large networks could potentially be used for tasks such as sensor fusion [4] or motor control.

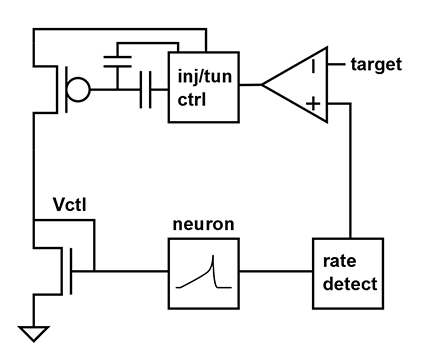

This project has two primary goals. First, we will recreate the low-level mechanisms that enable the signal processing properties of RCNs. We will initially focus on homeostasis, a control mechanism that ensures neurons fire at a desired average rate. Some synaptic learning rules are inherently unstable and cause runaway potentiation of synapses, so homeostasis is required to stabilize population dynamics.
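The interplay between an unstable learning rule and homeostasis can be illustrated with a toy model. The sketch below is not the RCN model from [1]; it assumes a single linear rate neuron with one synapse, a plain Hebbian update, and a multiplicative scaling term that pulls the rate toward a target. The function name `final_rate` and all parameter values are hypothetical and chosen only for illustration.

```python
def final_rate(eta_homeo, steps=2000, eta_hebb=0.01,
               target_rate=5.0, x=1.0, w0=0.5):
    """Return the output rate of a toy linear neuron after `steps` updates.

    Assumed model (illustrative only): rate = w * x, a plain Hebbian
    update, and a multiplicative homeostatic term that scales the weight
    toward `target_rate`. Setting eta_homeo=0 disables homeostasis.
    """
    w = w0
    for _ in range(steps):
        rate = w * x
        hebb = eta_hebb * rate * x                    # grows with activity
        homeo = eta_homeo * (target_rate - rate) * w  # opposes rate error
        w += hebb + homeo
    return w * x


unstable = final_rate(eta_homeo=0.0)   # Hebbian learning alone
stable = final_rate(eta_homeo=0.05)    # Hebbian learning plus homeostasis
print(f"without homeostasis: {unstable:.3g}")
print(f"with homeostasis:    {stable:.3g}")
```

Without the homeostatic term the weight grows geometrically. With it, the rate settles near the target, slightly above it in this toy model because the Hebbian term keeps pushing upward.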

Many neuromorphic systems are implemented in analog hardware to reduce the system’s overall power consumption [5]. To achieve the adaptation timescales typically associated with homeostasis, we will use a floating-gate transistor, which is essentially a transistor with modifiable characteristics. Floating-gate transistors have been used for many years in adaptive [6] and neuromorphic [7] circuits, which makes them well suited to this application. We will control the average firing rate of an analog neuron by slowly modifying a floating-gate transistor's characteristics.
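The control idea in this paragraph can be sketched as a slow feedback loop. The code below is a behavioral illustration, not a circuit or device model: it assumes a rectified-linear neuron whose rate depends on its input plus a slowly adapting `bias`, which is a hypothetical stand-in for the state stored on the floating gate. The function name `run_homeostasis`, the time constants, and the gains are all assumptions made for this example.

```python
def run_homeostasis(inputs, target_rate=10.0, gain=10.0,
                    tau_avg=0.1, k_adapt=0.05, dt=1e-3):
    """Simulate slow homeostatic control of a toy neuron's firing rate.

    `bias` stands in for a slowly programmable parameter (for example a
    floating-gate charge). It is nudged so that a running average of the
    rate approaches `target_rate`. All values are illustrative.
    """
    bias = 0.0       # slowly adapted parameter
    avg_rate = 0.0   # low-pass estimate of the firing rate (Hz)
    trace = []
    for inp in inputs:
        rate = gain * max(0.0, inp + bias)               # rectified-linear neuron
        avg_rate += dt * (rate - avg_rate) / tau_avg     # fast rate estimate
        bias += dt * k_adapt * (target_rate - avg_rate)  # slow adaptation
        trace.append(rate)
    return trace


# 30 s of strong input followed by 30 s of weak input (dt = 1 ms).
inputs = [2.0] * 30000 + [0.5] * 30000
trace = run_homeostasis(inputs)
print(f"rate at start:          {trace[0]:.2f} Hz")
print(f"rate before input step: {trace[29999]:.2f} Hz")
print(f"rate at end:            {trace[-1]:.2f} Hz")
```

The adaptation gain is deliberately small compared with the rate-estimation time constant, so the neuron responds to input changes immediately while its average rate drifts back to the target over seconds.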

The project’s second goal is to evaluate the implementation of RCNs on current neuromorphic hardware. Over the past few years, a number of research groups around the world have created different general-purpose neuromorphic hardware platforms. We will implement RCN network topologies on an existing neuromorphic platform and compare the system’s performance to theoretical models. We believe this evaluation of current systems will provide useful design goals for the next generation of neuromorphic hardware.

[1] Jug, Florian. "On Competition and Learning in Cortical Structures." Ph.D. Dissertation, ETH Zurich, 2012/1.

[2] Jug, Florian, Matthew Cook, and Angelika Steger. "Recurrent competitive networks can learn locally excitatory topologies." Neural Networks (IJCNN), The 2012 International Joint Conference on. IEEE, 2012.

[3] Cook, Matthew, et al. "Unsupervised learning of relations." Artificial Neural Networks–ICANN 2010. Springer Berlin Heidelberg, 2010. 164-173.

[4] Cook, Matthew, et al. "Interacting maps for fast visual interpretation." Neural Networks (IJCNN), The 2011 International Joint Conference on. IEEE, 2011.

[5] Chicca, Elisabetta, et al. "Neuromorphic electronic circuits for building autonomous cognitive systems." (2014): 1-22.

[6] Hasler, Paul, Bradley A. Minch, and Chris Diorio. "Adaptive circuits using pFET floating-gate devices." Advanced Research in VLSI, 1999. Proceedings. 20th Anniversary Conference on. IEEE, 1999.

[7] Brink, Stephen, et al. "A learning-enabled neuron array IC based upon transistor channel models of biological phenomena." Biomedical Circuits and Systems, IEEE Transactions on 7.1 (2013): 71-81.

| Last modified: | 18 September 2020 08.54 a.m. |